Since I started mining Ethereum almost two years ago, I have found that power distribution is important not just for equipment safety, but also for system stability. When I started mining, I thought my rigs would be fine as long as I used a robust server PSU to power the GPUs, with heavy 16 or 18AWG cables. After frying one motherboard and more than a couple of ATX PSUs, I've learned that a lot of careful design and testing is required.

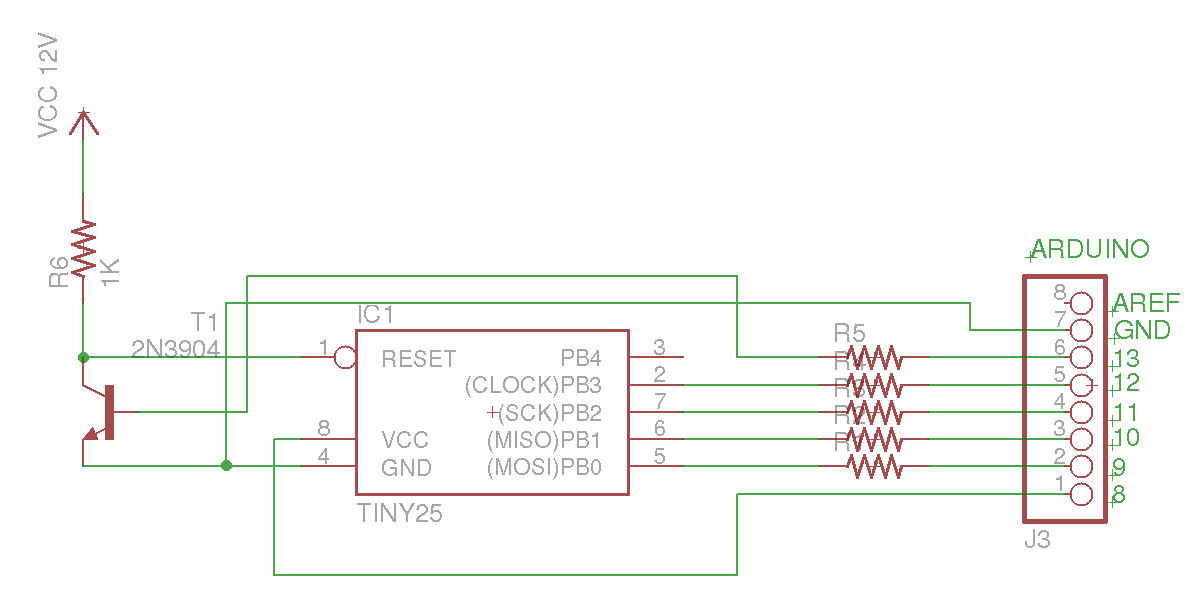

Using Dell, IBM, or HP server power supplies for mining rigs is not a new idea, so I won't go into too much detail about them. I do recommend making an interlock connector so the server PSU turns on at the same time as the motherboard. I also recommend connecting the server PSU only to the GPU PCIe power connectors, as they are isolated from the 12V supply for the motherboard. If you try to power ribbon risers from the server PSU, the 12V rails of the ATX and server PSUs will be interconnected, which can lead to feedback problems. Server PSUs are very robust and unlikely to be harmed, but I have killed a cheap 450W ATX PSU this way. USB risers are isolated from the motherboard's 12V supply, and therefore can be safely powered from the server PSU.

In the photo above, you might notice the grounding wire connecting all the cards, which then connects to a server PSU. I recently added this to the rig after measuring higher current flowing through two of the ground wires connected to the 6-pin PCIe power plugs. As I mentioned in my post about GPU PCIe power connections, there are only two ground pins, with the third ground wire being connected to the sense pin. With two ground pins and three power pins, each ground wire carries 50% more current than each 12V wire. Although the ground wires weren't heating up from the extra current, the connector was. Adding the ground bypass wire reduced the connector temperature to a reasonable level.
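The 50% figure follows from simple division, which the short sketch below works through. It assumes the current splits evenly across the wires of each polarity, and it uses 75W (the nominal rating of a 6-pin PCIe connector) as an illustrative load; the function name and the load value are my own choices, not measurements from the rig.

```python
def per_wire_current(total_watts, volts=12.0, supply_wires=3, ground_wires=2):
    """Return (amps per 12V wire, amps per ground wire).

    Assumes the load current is shared equally among the wires of
    each polarity, which real connectors only approximate.
    """
    total_amps = total_watts / volts
    return total_amps / supply_wires, total_amps / ground_wires


# Illustrative load: the nominal 75W rating of a 6-pin PCIe connector.
supply_amps, ground_amps = per_wire_current(75.0)
ratio = ground_amps / supply_amps

print(f"per 12V wire:    {supply_amps:.2f} A")
print(f"per ground wire: {ground_amps:.2f} A")
print(f"ground/12V ratio: {ratio:.2f}")
```

The ratio depends only on the pin counts (3/2), so it holds whatever the card actually draws.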

For ATX PSUs, I've used a few EVGA 500B units, and do not recommend them. While even my cheap old 300W power supplies use 18AWG wire for the hard drive power connectors, the SATA and Molex power cables on the 500B are only 20AWG. Powering more than one or two risers with a 20AWG cable is a recipe for trouble. I burned the 12V hard drive power wire on two 500B supplies before I realized this. I recently purchased a Rosewill 500W 80 Plus Gold PSU that was on sale at Newegg, and it is much better than the EVGA 500B. The Rosewill uses 18AWG wire in the hard drive cables, and it also has a 12V sense wire in the ATX power connector. This allows it to compensate for the voltage drop in the cable from the PSU to the motherboard. The sense wire is the thinner yellow wire in the photo below.
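To put rough numbers on the 18AWG versus 20AWG difference, here is a minimal sketch of the voltage drop and heat in a cable. The resistance values are the usual room-temperature figures for copper from standard AWG tables; the 0.5 m cable length and 6 A load (a few risers on one cable) are assumptions for illustration only.

```python
# Approximate resistance of copper wire at room temperature, in ohms per metre.
OHMS_PER_METRE = {18: 0.02095, 20: 0.03331}


def cable_loss(awg, length_m, amps):
    """Return (voltage drop in volts, heat in watts) for one cable run.

    The length is doubled because current flows out on the 12V wire
    and back on a ground wire of the same gauge.
    """
    resistance = OHMS_PER_METRE[awg] * length_m * 2
    drop = amps * resistance
    return drop, amps * drop


# Assumed values for illustration: 0.5 m of cable carrying 6 A.
LENGTH_M = 0.5
LOAD_AMPS = 6.0

drop_18, heat_18 = cable_loss(18, LENGTH_M, LOAD_AMPS)
drop_20, heat_20 = cable_loss(20, LENGTH_M, LOAD_AMPS)

print(f"18AWG: {drop_18:.3f} V drop, {heat_18:.2f} W of heat")
print(f"20AWG: {drop_20:.3f} V drop, {heat_20:.2f} W of heat")
```

At any given current, the 20AWG cable drops roughly 60% more voltage and produces roughly 60% more heat than 18AWG of the same length, and the heat grows with the square of the current as more risers are added.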

Speaking of voltage drop, I recommend checking the voltage at the PCIe power connector to ensure it is close to 12V. Most of my cards do not have a back plate, so I can use a multimeter to measure at the 12V pins of the power connector where they are soldered to the GPU PCB. I also recommend checking the temperature of power connectors, since good-quality, low-resistance connectors are just as important as heavy-gauge wires. Warm connectors are OK, but if they are so hot that you can't hold your fingers on them, that's a problem.

My last recommendation is for people in North America (and some other places) where 120V AC power is the norm. Wire up the outlets for your mining rigs for 240V instead of 120V. Power supplies are slightly more efficient at 240V, and will draw half as much current as they do at 120V. Lower current draw means less line loss going to the power supply, and therefore less heat generated in power cords and plugs. Properly designed AC power cables and plugs should never overheat below 10-15 amps, but I have seen melted and burned connectors at barely over 10A of steady current draw.
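The effect of halving the current is larger than it first sounds, because resistive heating scales with the square of the current. The sketch below illustrates this with a hypothetical 1200W load and a hypothetical 0.05 ohm of combined cord and plug resistance; both numbers are assumptions, and the calculation ignores power factor and the small efficiency gain at 240V.

```python
def ac_current(watts, volts):
    """Current drawn from the wall, ignoring power factor."""
    return watts / volts


def cord_loss(watts, volts, cord_ohms):
    """Heat dissipated in the cord and plug contacts (I squared times R)."""
    amps = ac_current(watts, volts)
    return amps ** 2 * cord_ohms


# Hypothetical values for illustration only.
LOAD_WATTS = 1200.0
CORD_OHMS = 0.05

amps_120 = ac_current(LOAD_WATTS, 120.0)
amps_240 = ac_current(LOAD_WATTS, 240.0)
loss_120 = cord_loss(LOAD_WATTS, 120.0, CORD_OHMS)
loss_240 = cord_loss(LOAD_WATTS, 240.0, CORD_OHMS)

print(f"120V: {amps_120:.1f} A, {loss_120:.2f} W lost in cord and plugs")
print(f"240V: {amps_240:.1f} A, {loss_240:.2f} W lost in cord and plugs")
```

With the same cord and the same load, running at 240V cuts the heat in the cord and plugs to a quarter of the 120V figure.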